Accelerating Inference Workloads with AIAK-Inference

Last updated: 2025-06-06

Prerequisites

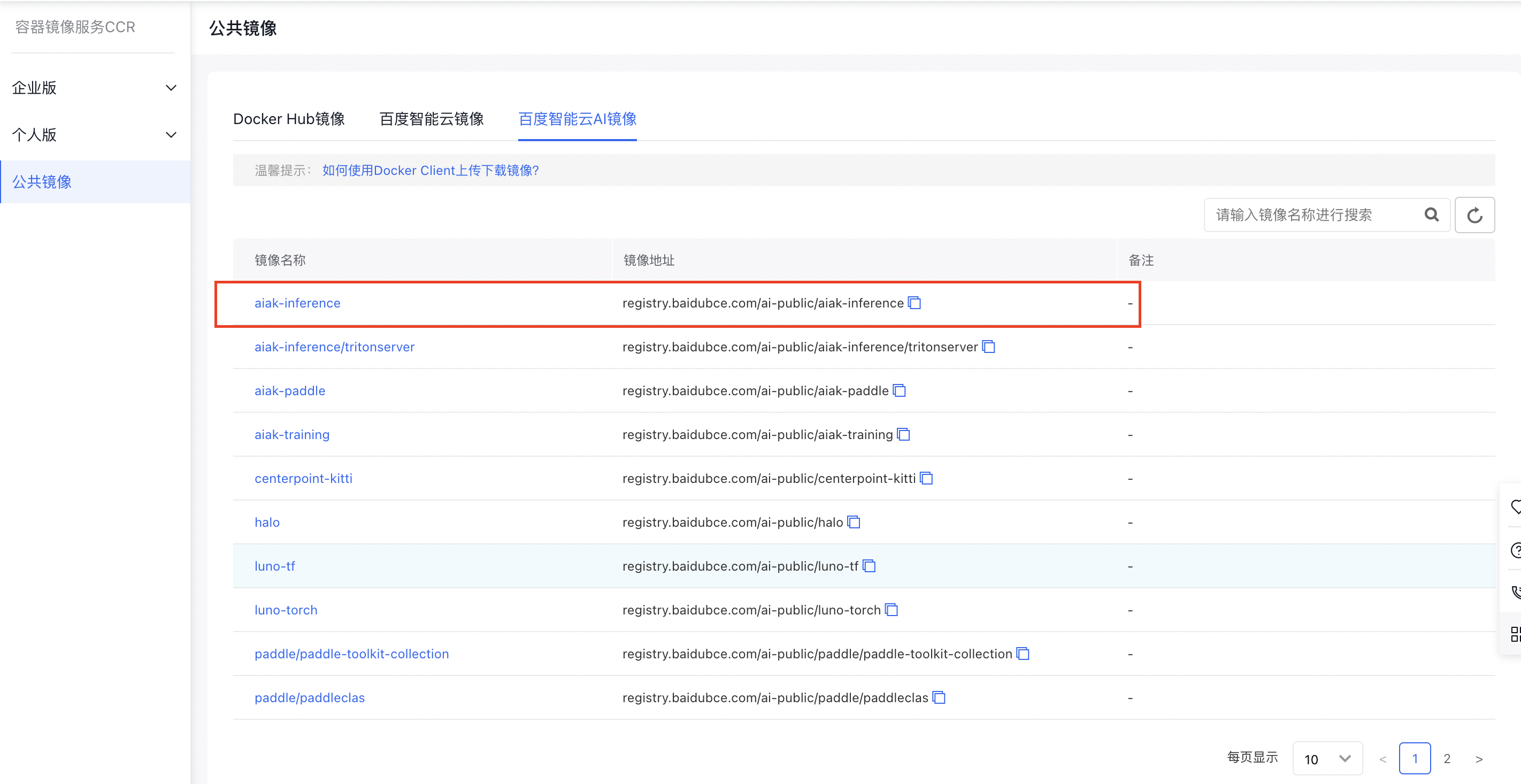

- Use an AIAK-Inference inference acceleration image from CCR as the base image.
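Before starting, you can check inside the container that the Python modules used in this guide are importable. This is a minimal sketch that uses only the standard library. importlib.util.find_spec locates a top-level module without importing it. The module lists come from the example code in this guide, and the helper name check_modules is hypothetical.

```python
import importlib.util

# Modules used by the two walkthroughs in this guide, grouped by scenario.
REQUIRED_MODULES = {
    "tensorflow": ["tensorflow", "numpy", "aiak_inference"],
    "pytorch": ["torch", "torchvision", "aiak_inference"],
}


def check_modules(names):
    """Return {module_name: True/False} depending on whether each top-level module can be found."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    for scenario, modules in REQUIRED_MODULES.items():
        status = check_modules(modules)
        missing = [name for name, found in status.items() if not found]
        print(scenario, "OK" if not missing else "missing: " + ", ".join(missing))
```

Run it with the Python interpreter inside the container. Each workflow only needs the modules in its own group.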

Procedure

TensorFlow model optimization

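In the "Baidu AI Cloud AI Images" category of the CCR public images, select the tag aiak-inference:ubuntu18.04-cu11.2-tf2.4.1-py3.6-aiak1.1-latest. You can also pull it with docker pull registry.baidubce.com/ai-public/aiak-inference:ubuntu18.04-cu11.2-tf2.4.1-py3.6-aiak1.1-latest. The image comes with CUDA, TensorFlow 2.4.1, and other base libraries preinstalled.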

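Prepare a ResNet50-based model inside the container. The example loads a frozen GraphDef (frozen_inference_graph.pb) whose input node is image_tensor and whose outputs are the detection tensors. Replace the placeholder URL with the address of your own model archive: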

```python
import os
import tarfile
import urllib.request

import numpy as np
import tensorflow.compat.v1 as tf

tf.disable_eager_execution()


def _wget_demo_tgz():
    # Download a public ResNet50-based model archive (replace the placeholder URL).
    url = 'http://url/to/your/model/YOUR_MODEL.tar.gz'
    local_tgz = os.path.basename(url)
    # Assumes the archive unpacks into a directory named after the file, e.g. YOUR_MODEL/
    local_dir = local_tgz.split('.')[0]
    if not os.path.exists(local_dir):
        urllib.request.urlretrieve(url, local_tgz)
        with tarfile.open(local_tgz, 'r:gz') as tar:
            tar.extractall()
    model_path = os.path.abspath(os.path.join(local_dir, "frozen_inference_graph.pb"))
    # Load the frozen graph into a GraphDef.
    graph_def = tf.GraphDef()
    with open(model_path, 'rb') as f:
        graph_def.ParseFromString(f.read())
    # Use random numbers as test input.
    test_data = np.random.rand(1, 800, 1000, 3)
    return graph_def, {'image_tensor:0': test_data}


graph_def, test_data = _wget_demo_tgz()

input_nodes = ['image_tensor']
output_nodes = ['detection_boxes', 'detection_scores', 'detection_classes', 'num_detections', 'detection_masks']
```

Then run inference on the model:

```python
import time


def benchmark(model):
    tf.reset_default_graph()
    # Import the GraphDef into the default graph, which the session below uses.
    tf.import_graph_def(model, name="")
    # Fetch the model outputs; fetching only the fed input tensor would skip the actual computation.
    fetches = [name + ':0' for name in output_nodes]
    with tf.Session() as sess:
        # Warmup!
        for _ in range(1000):
            sess.run(fetches, test_data)
        # Benchmark!
        num_runs = 1000
        start = time.time()
        for _ in range(num_runs):
            sess.run(fetches, test_data)
        elapsed = time.time() - start
        rt_ms = elapsed / num_runs * 1000.0
        # Show the result!
        print("Latency of model: {:.2f} ms.".format(rt_ms))


# original graph
print("before compile:")
benchmark(graph_def)
```

Next, apply AIAK-Inference optimization:

```python
import aiak_inference

optimized_model = aiak_inference.optimize(
    graph_def,
    'gpu',
    outputs=['detection_boxes', 'detection_scores', 'detection_classes', 'num_detections', 'detection_masks'],
)
```

The optimized model is still a GraphDef, so you can run inference on it with the same code:

Plain Text

1# optimized graph

2print("after compile:")

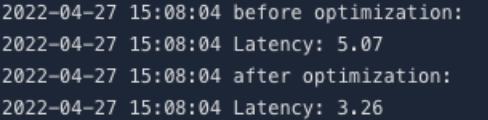

3benchmark(optimized_model)经过比较,可以看到性能有提升:
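You can summarize the two printed latencies as a single figure. The speedup is the ratio of the latency before optimization to the latency after it, and the relative latency reduction is 1 - after/before. This is a minimal sketch. The function name compare_latency is hypothetical, and the latencies in the usage line are placeholders, not measured results.

```python
def compare_latency(before_ms, after_ms):
    """Return (speedup, latency_reduction_percent) from two mean latencies in ms."""
    if before_ms <= 0 or after_ms <= 0:
        raise ValueError("latencies must be positive")
    speedup = before_ms / after_ms
    reduction_pct = (1.0 - after_ms / before_ms) * 100.0
    return speedup, reduction_pct


if __name__ == "__main__":
    # Placeholder numbers for illustration only; substitute the latencies printed by benchmark().
    speedup, reduction = compare_latency(20.0, 8.0)
    print("speedup: {:.2f}x, latency reduction: {:.1f}%".format(speedup, reduction))
```

The same helper applies to the PyTorch walkthrough, since both benchmarks print a mean latency in milliseconds.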

PyTorch model optimization

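In the "AI Acceleration Images" category of the CCR public images, select the acceleration image aiak-inference:cuda11.2_cudnn8_trt8.4_torch1.11-aiak_1.1.1_latest. You can also pull it with docker pull registry.baidubce.com/ai-public/aiak-inference:cuda11.2_cudnn8_trt8.4_torch1.11-aiak_1.1.1_latest. The image comes with CUDA, PyTorch 1.11, and other base libraries preinstalled.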

Prepare a PyTorch model in the container, using ResNet50 as an example:

```python
import time

import torch
import torchvision.models as models

model = models.resnet50().float().cuda()
model = torch.jit.script(model).eval()  # convert to a static graph with TorchScript
dummy = torch.rand(1, 3, 224, 224).cuda()
```

Run inference on it:

```python
@torch.no_grad()
def benchmark(model, inp):
    # Warm-up
    for _ in range(100):
        model(inp)
    # CUDA kernels run asynchronously; synchronize so the timer covers the actual GPU work.
    torch.cuda.synchronize()
    start = time.time()
    for _ in range(200):
        model(inp)
    torch.cuda.synchronize()
    elapsed_ms = (time.time() - start) * 1000
    print("Latency: {:.2f} ms".format(elapsed_ms / 200))


# benchmark before optimization
print("before optimization:")
benchmark(model, dummy)
```

Next, optimize the model with AIAK-Inference to obtain the optimized model:

```python
import aiak_inference

optimized_model = aiak_inference.optimize(
    model,
    'gpu',
    test_data=[dummy],
)
```

Run inference again:

Plain Text

1# benchmark after optimization

2print("after optimization:")

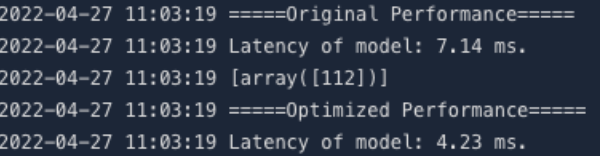

3benchmark(optimized_model, dummy)比较二者性能,可以看到单次推理延迟有大幅下降,证明AIAK-Inference加速能力:
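A single average from time.time() can be noisy. A somewhat more robust approach is to time each call with time.perf_counter() and report percentiles alongside the mean. For GPU models, pass a synchronization function so that each sample includes the device work. This is a framework-agnostic sketch. The helper name measure_latency and its parameters are hypothetical and are not part of AIAK-Inference.

```python
import statistics
import time


def measure_latency(fn, warmup=100, iters=200, sync=None):
    """Call fn() repeatedly and return latency statistics in milliseconds.

    sync: optional callable that blocks until queued device work has finished,
    e.g. torch.cuda.synchronize when fn launches asynchronous CUDA kernels.
    """
    for _ in range(warmup):
        fn()
    if sync is not None:
        sync()  # keep leftover warm-up work out of the first sample
    samples = []
    for _ in range(iters):
        start = time.perf_counter()
        fn()
        if sync is not None:
            sync()  # wait for the device so the sample covers the real execution time
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    # Nearest-rank 99th percentile: index ceil(0.99 * n) - 1, computed with integers.
    p99_index = (99 * len(samples) + 99) // 100 - 1
    return {
        "mean_ms": statistics.mean(samples),
        "p50_ms": statistics.median(samples),
        "p99_ms": samples[p99_index],
    }
```

With the PyTorch example, you could call measure_latency(lambda: optimized_model(dummy), sync=torch.cuda.synchronize) inside a with torch.no_grad(): block. For the TensorFlow session, sess.run already returns only after computing the fetched values, so you can omit sync.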