Reading and Writing BOS with DataX

Last updated: 2024-08-15

DataX

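DataX is an offline synchronization tool for heterogeneous data sources. It provides stable, efficient data synchronization between many kinds of sources, including relational databases (MySQL, Oracle, etc.), HDFS, Hive, ODPS, HBase, and FTP.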

Configuration

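- Download and extract DataX.

- Download BOS-HDFS and extract it. Copy its jar files into `plugin/reader/hdfsreader/libs/` and `plugin/writer/hdfswriter/libs/` under the DataX installation directory.

- Open the `bin/datax.py` script in the DataX installation directory and change the `CLASS_PATH` variable to the following: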

```python
CLASS_PATH = ("%s/lib/*:%s/plugin/reader/hdfsreader/libs/*:%s/plugin/writer/hdfswriter/libs/*:.") % (DATAX_HOME, DATAX_HOME, DATAX_HOME)
```

Getting Started
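Before you run the example, you can script the two file-system steps from the configuration list above: copying the BOS-HDFS jars and editing `CLASS_PATH`. The sketch below is a minimal Python version, not part of DataX or BOS-HDFS. Its assumptions:

- `install_bos_jars` and `patch_class_path` are hypothetical helper names.
- The BOS-HDFS package has already been downloaded and extracted locally.
- The host is Linux or macOS, because the `CLASS_PATH` value uses `:` as the classpath separator.

```python
import glob
import os
import re
import shutil

# The two plugin library directories named in the configuration steps, relative to DATAX_HOME.
PLUGIN_LIB_DIRS = (
    os.path.join("plugin", "reader", "hdfsreader", "libs"),
    os.path.join("plugin", "writer", "hdfswriter", "libs"),
)

# The CLASS_PATH value from the configuration step (":" separators, so Linux/macOS only).
NEW_CLASS_PATH = (
    'CLASS_PATH = ("%s/lib/*:%s/plugin/reader/hdfsreader/libs/*:'
    '%s/plugin/writer/hdfswriter/libs/*:.") % (DATAX_HOME, DATAX_HOME, DATAX_HOME)'
)


def install_bos_jars(datax_home, bos_hdfs_dir):
    """Copy every .jar found under the extracted BOS-HDFS directory into both plugin lib dirs.

    Returns the sorted base names of the jars that were copied.
    """
    jars = sorted(glob.glob(os.path.join(bos_hdfs_dir, "**", "*.jar"), recursive=True))
    for rel in PLUGIN_LIB_DIRS:
        target = os.path.join(datax_home, rel)
        os.makedirs(target, exist_ok=True)
        for jar in jars:
            shutil.copy2(jar, target)
    return [os.path.basename(jar) for jar in jars]


def patch_class_path(datax_home):
    """Rewrite each CLASS_PATH assignment line in bin/datax.py, keeping its indentation.

    Returns the number of lines rewritten (0 means no assignment was found).
    """
    script = os.path.join(datax_home, "bin", "datax.py")
    with open(script, encoding="utf-8") as f:
        source = f.read()
    patched, count = re.subn(
        r"^([ \t]*)CLASS_PATH[ \t]*=.*$",
        lambda m: m.group(1) + NEW_CLASS_PATH,
        source,
        flags=re.MULTILINE,
    )
    with open(script, "w", encoding="utf-8") as f:
        f.write(patched)
    return count
```

For example, call `install_bos_jars("/opt/datax", "/opt/bos-hdfs")` and then `patch_class_path("/opt/datax")`; both paths are placeholders. The regex rewrites every `CLASS_PATH = ...` line it finds, so review `bin/datax.py` afterwards if your copy assigns `CLASS_PATH` in more than one place.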

Example

Read the file testfile from {your bucket} and write it to the bucket {your other bucket}.

testfile:

```text
1 hello
2 bos
3 world
```

bos2bos.json:

```json
{
  "job": {
    "setting": {
      "speed": {
        "channel": 1
      },
      "errorLimit": {
        "record": 0,
        "percentage": 0.02
      }
    },
    "content": [{
      "reader": {
        "name": "hdfsreader",
        "parameter": {
          "path": "/testfile",
          "defaultFS": "bos://{your bucket}/",
          "column": [
            {
              "index": 0,
              "type": "long"
            },
            {
              "index": 1,
              "type": "string"
            }
          ],
          "fileType": "text",
          "encoding": "UTF-8",
          "hadoopConfig": {
            "fs.bos.endpoint": "bj.bcebos.com",
            "fs.bos.impl": "org.apache.hadoop.fs.bos.BaiduBosFileSystem",
            "fs.bos.access.key": "{your ak}",
            "fs.bos.secret.access.key": "{your sk}"
          },
          "fieldDelimiter": " "
        }
      },
      "writer": {
        "name": "hdfswriter",
        "parameter": {
          "path": "/testtmp",
          "fileName": "testfile.new",
          "defaultFS": "bos://{your other bucket}/",
          "column": [
            {
              "name": "col1",
              "type": "string"
            },
            {
              "name": "col2",
              "type": "string"
            }
          ],
          "fileType": "text",
          "encoding": "UTF-8",
          "hadoopConfig": {
            "fs.bos.endpoint": "bj.bcebos.com",
            "fs.bos.impl": "org.apache.hadoop.fs.bos.BaiduBosFileSystem",
            "fs.bos.access.key": "{your ak}",
            "fs.bos.secret.access.key": "{your sk}"
          },
          "fieldDelimiter": " ",
          "writeMode": "append"
        }
      }
    }]
  }
}
```

Replace {your bucket}, {your other bucket}, {your ak}, {your sk}, and the endpoint (bj.bcebos.com here) with the values for your own buckets and account.
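Filling in the placeholders by hand is error-prone, and a single missing comma makes the whole job file invalid JSON. The sketch below assumes the configuration above is saved as a template file, and `render_job` is a hypothetical helper, not a DataX API. It does four things:

- Substitutes each `{your ...}` placeholder with a JSON-escaped value.
- Stops with an error if any placeholder is left unfilled.
- Parses the result with `json.loads`, so syntax errors surface before DataX runs.
- Optionally overrides `fs.bos.endpoint` wherever a side already sets it.

```python
import json
import re

# Matches the "{your ...}" placeholders used in bos2bos.json, e.g. "{your bucket}".
PLACEHOLDER = re.compile(r"\{your [^{}]*\}")


def render_job(template_text, values, endpoint=None):
    """Fill placeholders in a DataX job template and return the parsed job dict.

    values maps placeholder names without braces (e.g. "your bucket") to strings.
    """
    text = template_text
    for name, value in values.items():
        # json.dumps(...)[1:-1] escapes quotes and backslashes so the JSON stays valid.
        text = text.replace("{" + name + "}", json.dumps(value)[1:-1])
    missing = sorted(set(PLACEHOLDER.findall(text)))
    if missing:
        raise ValueError("unfilled placeholders: " + ", ".join(missing))
    job = json.loads(text)  # raises json.JSONDecodeError on malformed JSON
    if endpoint is not None:
        for task in job["job"]["content"]:
            for side in ("reader", "writer"):
                hadoop_conf = task[side]["parameter"].get("hadoopConfig", {})
                if "fs.bos.endpoint" in hadoop_conf:
                    hadoop_conf["fs.bos.endpoint"] = endpoint
    return job
```

To use it:

1. Read bos2bos.json.
2. Call `render_job(text, {"your bucket": "src-bucket", "your other bucket": "dst-bucket", "your ak": ak, "your sk": sk})`. The bucket names are illustrative.
3. Write the returned dict to a file with `json.dump` and pass that file to `bin/datax.py`.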

BOS can also be used on only one side of a job, as just the reader or just the writer.
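For instance, you can read a BOS file and print it to the console instead of writing it to a second bucket. The sketch below builds such a job in Python. It assumes DataX's bundled `streamwriter` plugin, with `print` enabled, on the writer side. The helper names are hypothetical, and the reader block mirrors the one in bos2bos.json.

```python
import json

BOS_IMPL = "org.apache.hadoop.fs.bos.BaiduBosFileSystem"


def bos_hadoop_config(ak, sk, endpoint="bj.bcebos.com"):
    """hadoopConfig entries for BOS, using the same keys as bos2bos.json."""
    return {
        "fs.bos.endpoint": endpoint,
        "fs.bos.impl": BOS_IMPL,
        "fs.bos.access.key": ak,
        "fs.bos.secret.access.key": sk,
    }


def bos_reader_only_job(bucket, path, ak, sk, endpoint="bj.bcebos.com"):
    """Build a job whose reader is BOS (via hdfsreader) and whose writer is not."""
    reader = {
        "name": "hdfsreader",
        "parameter": {
            "path": path,
            "defaultFS": "bos://%s/" % bucket,
            # Same two space-delimited columns as testfile: a long, then a string.
            "column": [
                {"index": 0, "type": "long"},
                {"index": 1, "type": "string"},
            ],
            "fileType": "text",
            "encoding": "UTF-8",
            "hadoopConfig": bos_hadoop_config(ak, sk, endpoint),
            "fieldDelimiter": " ",
        },
    }
    # Assumption: streamwriter with "print": True writes each record to stdout.
    writer = {"name": "streamwriter", "parameter": {"print": True, "encoding": "UTF-8"}}
    return {
        "job": {
            "setting": {"speed": {"channel": 1}},
            "content": [{"reader": reader, "writer": writer}],
        }
    }
```

Save the result with `json.dump(job, f, indent=2)` to a file such as `bos2stream.json` (the name is illustrative). Running `python bin/datax.py bos2stream.json` should then print the records of testfile instead of copying them to another bucket.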

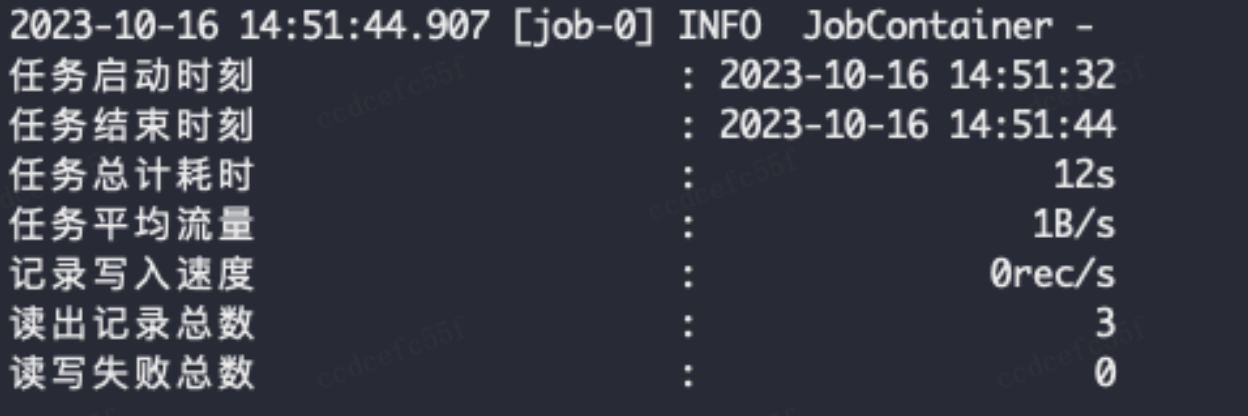

Result

```bash
python bin/datax.py bos2bos.json
```

On success, the command returns: