End-to-End Speech Language Model iOS SDK

1. Document Overview

1.1 About This Document

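| Document name | End-to-End Speech Language Model Integration Guide |
|---|---|
| Platform | iOS |
| Submission date | 2025-05-15 |
| Overview | This document is the user guide for the Baidu Speech Open Platform iOS SDK. It describes how to use the interfaces of the end-to-end speech language model. |
| Download package | End-to-End Speech Language Model iOS SDK |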

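1.2 Applying for a Trial

This interface is in public beta. The free call quota is granted automatically when you enter the console.

2. Development Preparation

2.1 Environment Requirements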

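| Item | Version |
|---|---|
| Speech recognition SDK | 3.3.2.3 |
| Supported systems | iOS 12.0 and later |
| Supported architectures | armv7, arm64 |
| Development environment | The project uses optimization options such as LTO; the latest version of Xcode is recommended |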

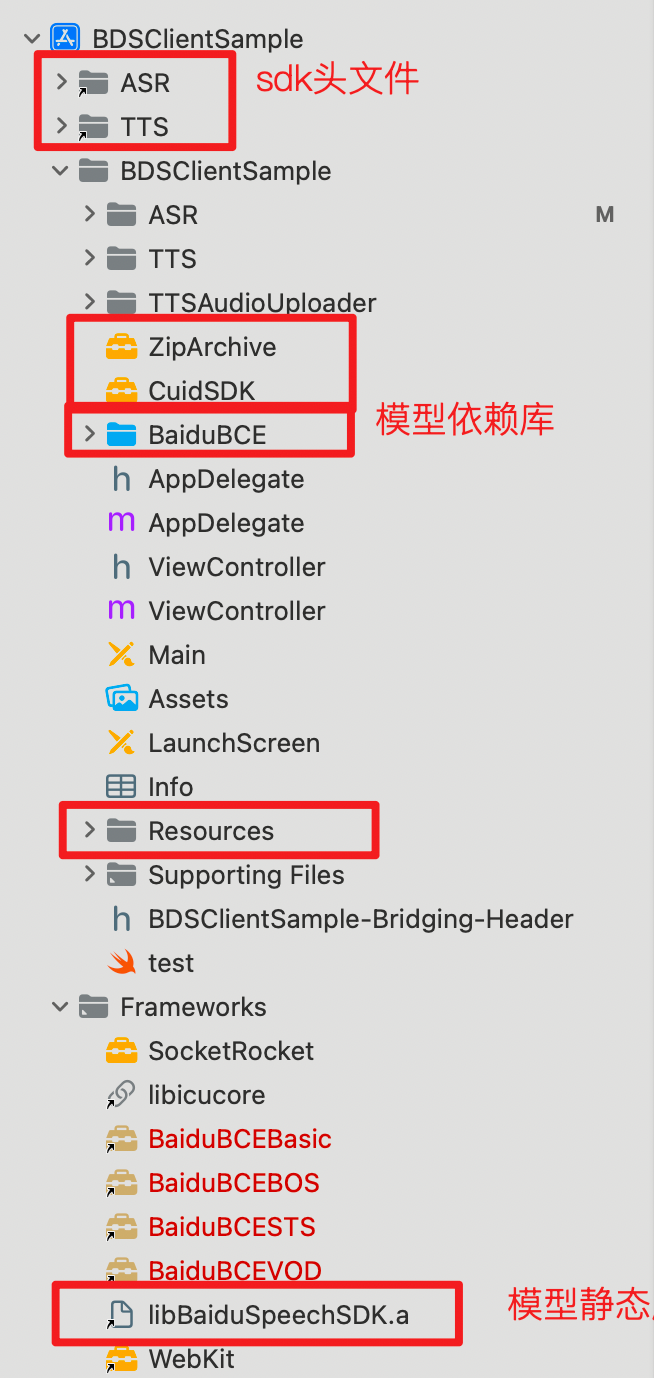

| libBaiduSpeechSDK.a | 端到端模型依赖静态库 |

2.2 SDK目录结构

2.3 SDK安装

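- Download the package: End-to-End Speech Language Model iOS SDK

- Add the SDK header files in BDSClientHeaders/ASR and BDSClientHeaders/TTS, together with the libBaiduSpeechSDK.a static library, to your project.

- Add the following frameworks and required system libraries: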

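| Framework | Description |
|---|---|
| libc++.tbd | C/C++ language feature support |
| libz.1.2.5.tbd | gzip support |
| libsqlite3.0.tbd | Local database support |
| AudioToolbox | Recording and playback support |
| AVFoundation | Recording and playback support |
| CFNetwork | Network access support |
| CoreLocation | Device location support, used to improve recognition accuracy |
| CoreTelephony | Mobile network type detection |
| SystemConfiguration | Network status detection |
| GLKit | Required by the built-in recognition UI control |
| BaiduBCEBasic | Internal model dependencies located in the BaiduBCE folder |
| BaiduBCEBOS | |
| BaiduBCESTS | |
| BaiduBCEVOD | |
| ZipArchive | Dependency of the TTS synthesis feature |
| CuidSDK | |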

// Import the required headers, following the ASRViewController.mm class in the demo. Example:
#import "ASRViewController.h"
#import "BDSASRDefines.h"
#import "BDSASRParameters.h"
#import "BDSWakeupDefines.h"
#import "BDSWakeupParameters.h"
#import "BDSUploaderDefines.h"
#import "BDSUploaderParameters.h"
#import "BDSEventManager.h"
#import "BDVRSettings.h"
#import "fcntl.h"
#import "AudioInputStream.h"
#import "BDSSpeechSynthesizer.h"
#import "TTDFileReader.h"
#import "BDSCacheReferenceManager.h"
#import "ASRPlayTestConfigViewController.h"
#import "BDSOTADefines.h"
#import "BDSOTAParameters.h"
#import "BDSCompressionManager.h"
#include <sys/socket.h>
#include <netinet/in.h>
#include <errno.h>
#import <mach/mach.h>
#import <AVFoundation/AVAudioSession.h>

2.4 Authentication

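2.4.1 access_token Authentication

- Obtain the AK/SK

Follow the access_token authentication mechanism described in "General Reference - Authentication Mechanism" on the Baidu AI Open Platform to obtain the AK/SK. You will receive three values: AppID, API Key, and Secret Key.

- Pass the credentials when you initialize the SDK. For parameter details, see "3.2.3 Initialize the SDK".

// ... other parameters omitted
[self.asrEventManager setParameter:@"<AppID from the open platform>" forKey:BDS_ASR_APP_ID]; // appid field
[self.asrEventManager setParameter:@"<API Key from the open platform>" forKey:BDS_ASR_API_KEY]; // apikey field

2.4.2 API Key Authentication

Note: during the invitation-only test phase, only the access_token authentication mechanism is supported.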

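3. SDK Integration

3.1 Functional Interfaces

The main classes and interfaces in the SDK are:

- BDSEventManager: the speech event manager, which manages speech recognition, speech synthesis and other events.

- BDSSpeechSynthesizer: the speech synthesis class, which manages synthesis and playback.

- BDSASRParameters.h, BDSSpeechSynthesizerParams.h: header files containing the key constants for the speech recognition and speech synthesis parameters.

- VoiceRecognitionClientWorkStatus (protocol method): implement this method of the speech recognition protocol to handle the events produced during recognition, including the completion callback.

- BDSSpeechSynthesizerDelegate (protocol): adopt the speech synthesis protocol and handle the events produced during synthesis in its methods.

3.1.1 BDSEventManager: Speech Recognition Operations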

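- Method list

- SDKInitial: initialize the SDK

  - Description: call it once after the app launches. Do not call it again.

  - Input parameters

    - configVoiceRecognitionClient: initializes the SDK and configures its parameters.

    - ASR parameters: set them with setParameter:forKey:. The table below lists the available parameters.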

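| Parameter | Type/Value | Required | Description |
|---|---|---|---|
| BDS_ASR_PRODUCT_ID | String | Yes | Recognition environment ID: 1843 |
| deviceID | String | Yes | Unique device ID. Obtain it with getDeviceId; it must not be passed in manually |
| PID | String | Yes | Recognition environment ID: 1843 |
| APP_KEY | String | Yes | Recognition environment key. Default: com.baidu.app |
| URL | String | Yes | Recognition environment URL. Default: https://vop.baidu.com/v2 |
| APP_ID | String | Yes | Credential assigned when you create an application on the open platform. Use this parameter to supply credentials in production. See "2.4 Authentication" |
| APP_API_KEY | String | Yes | Credential assigned when you create an application on the open platform. Use this parameter to supply credentials in production. See "2.4 Authentication" |
| BDS_ASR_COMPRESSION_TYPE | String | Yes | Audio compression type. Default: EVR_AUDIO_COMPRESSION_OPUS; alternative: EVR_AUDIO_COMPRESSION_BV32. The default, OPUS, is recommended |
| TRIGGER_MODE | int | Yes | 1: tap to recognize (iOS does not distinguish tap from long press); 2: recognize after wakeup. Configure it as described in "3.3 Development Scenarios" |
| ASR_ENABLE_MUTIPLY_SPEECH | int | Optional | Whether to enable full duplex. Duplex is the default |
| BDS_ASR_MODEL_VAD_DAT_FILE | String | | VAD resource path, i.e. the path of the resource copied in "3.2.1 Initialize the Environment" |
| LOG_LEVEL | int | Optional | Log level. Values: EVRDebugLogLevelOff = 0, EVRDebugLogLevelFatal = 1 (default), EVRDebugLogLevelError = 2, EVRDebugLogLevelWarning = 3, EVRDebugLogLevelInformation = 4, EVRDebugLogLevelDebug = 5, EVRDebugLogLevelTrace = 6 (all logs) |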
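The log level might come from user input, such as a debug settings screen. In that case it can help to clamp the value to the documented range 0..6 before passing it to setParameter:forKey:.

The sketch below is plain C, so it compiles unchanged in an Objective-C .m file. The helper names clamp_log_level and log_level_name are hypothetical. The level names are copied from the LOG_LEVEL row above; the authoritative values are the EVRDebugLogLevel constants in the SDK headers.

```c
/* Level names copied from the LOG_LEVEL row above. The authoritative
   values are the EVRDebugLogLevel constants in the SDK headers. */
static const char *const kLogLevelNames[] = {
    "EVRDebugLogLevelOff",         /* 0: logging off */
    "EVRDebugLogLevelFatal",       /* 1: default */
    "EVRDebugLogLevelError",       /* 2 */
    "EVRDebugLogLevelWarning",     /* 3 */
    "EVRDebugLogLevelInformation", /* 4 */
    "EVRDebugLogLevelDebug",       /* 5 */
    "EVRDebugLogLevelTrace"        /* 6: all logs */
};

/* Number of documented levels (7). */
enum { kLogLevelCount = (int)(sizeof kLogLevelNames / sizeof kLogLevelNames[0]) };

/* Hypothetical helper: clamps a requested level into the documented
   range 0..6. */
int clamp_log_level(int level) {
    if (level < 0) {
        return 0;
    }
    if (level >= kLogLevelCount) {
        return kLogLevelCount - 1;
    }
    return level;
}

/* Hypothetical helper: returns the documented name of the level that
   would actually be applied, which is handy for logging. */
const char *log_level_name(int level) {
    return kLogLevelNames[clamp_log_level(level)];
}
```

In the app you could then pass @(clamp_log_level(requested)) for BDS_ASR_DEBUG_LOG_LEVEL, where requested is your own variable.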

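- Recognition callback: VoiceRecognitionClientWorkStatus, the recognition protocol interface. For details, see "3.1.2 VoiceRecognitionClientWorkStatus".

- BDS_ASR_CMD_STOP: stop speech recognition

  - Description: stops the current recognition and keeps the current result. Audio that has already been sent is processed normally.

  - Input parameters: none

  - Return value: none

- BDS_ASR_CMD_CANCEL: cancel speech recognition

  - Description: cancels recognition and stops further processing. Audio that was sent but not yet processed is not processed.

  - Input parameters: none

  - Return value: none

- BDS_ASR_CMD_PAUSE: pause speech recognition

  - Description: pauses speech recognition.

  - Input parameters: BDS_ASR_SN, the recognition SN obtained from the VoiceRecognitionClientWorkStatus callback (see "3.2.7 Pause Recognition")

  - Return value: none

- BDS_ASR_CMD_RESUME: resume speech recognition

  - Description: resumes speech recognition.

  - Input parameters: none

  - Return value: none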
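The difference between BDS_ASR_CMD_STOP and BDS_ASR_CMD_CANCEL is about what happens to audio that has been sent but not yet processed. The toy model below makes that concrete.

It is not SDK code and does not describe the SDK's internals. It only encodes the two documented behaviors as operations on a hypothetical ToySession counter pair. The code is plain C, so it also compiles in an Objective-C file.

```c
#include <assert.h>
#include <stddef.h>

/* Toy model, not SDK code: counts audio chunks that have been sent to the
   server, split into those still waiting and those already processed. */
typedef struct {
    size_t pending;   /* sent but not yet processed */
    size_t processed; /* already turned into recognition output */
} ToySession;

/* Mirrors the documented BDS_ASR_CMD_STOP behavior: capture ends, but
   audio that was already sent is still processed, so the result is kept. */
void toy_stop(ToySession *s) {
    assert(s != NULL);
    s->processed += s->pending;
    s->pending = 0;
}

/* Mirrors the documented BDS_ASR_CMD_CANCEL behavior: processing stops and
   audio that was sent but not yet processed is dropped. */
void toy_cancel(ToySession *s) {
    assert(s != NULL);
    s->pending = 0;
}
```

Starting from {pending = 3, processed = 2}, stopping ends with 5 processed chunks. Cancelling ends with only the 2 that were already processed.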

3.1.2 VoiceRecognitionClientWorkStatus: Recognition Event Listener

You must implement this interface and pass it in when you start recognition.

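- Method list

  (void)VoiceRecognitionClientWorkStatus:(int)workStatus obj:(id)aObj;

  Receives, through workStatus, the callback events produced during recognition.

- Input parameters

  - workStatus: enum, the recognition callback status. See the table below for the specific events.

  - obj: id, the data object carried by the event. Its type depends on workStatus (the sample code in "3.2.2" handles it as NSData, NSDictionary or NSError), so parse it accordingly.

- Return value

  - None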

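| Callback status | Callback parameter | Type | Description |
|---|---|---|---|
| EVoiceRecognitionClientWorkStatusStartWorkIng | | enum | Ready; the user can start speaking. Usually you tell the user through the UI that they can speak after receiving this event |
| EVoiceRecognitionClientWorkStatusStart | | enum | Start of speech detected |
| EVoiceRecognitionClientWorkStatusFirstPlaying | | enum | First TTS packet started playing |
| EVoiceRecognitionClientWorkStatusEndPlaying | | enum | TTS playback finished |
| EVoiceRecognitionClientWorkStatusCancel | | enum | Recognition canceled by the user |
| EVoiceRecognitionClientWorkStatusFlushData | JSON string | enum | Intermediate recognition result |
| EVoiceRecognitionClientWorkStatusEnd | | enum | Local audio capture ended; waiting for the recognition result, then recording stops |
| EVoiceRecognitionClientWorkStatusNewRecordData | | enum | Recorded audio data (corresponds to the intermediate recognition results) |
| EVoiceRecognitionClientWorkStatusChunkTTS | JSON string | enum | TTS data returned |
| EVoiceRecognitionClientWorkStatusFinish | JSON string | enum | Recognition finished; the server returned the result |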

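3.1.3 BDSSpeechSynthesizer: Speech Synthesis Operations

- Method list

- [[BDSSpeechSynthesizer sharedInstance] setSynthesizerDelegate:self]; sets the speech synthesis delegate, which must implement the BDSSpeechSynthesizerDelegate protocol. For details, see "3.1.4 BDSSpeechSynthesizerDelegate".

  - Description: registers the object that receives the synthesis callbacks.

  - Return value: none

- speakSentence:(NSString*)sentence withError:(NSError**)err: synthesizes and plays audio

  - Description: the interface for synthesizing and playing audio.

  - Input parameters:

    - sentence: NSString, the content to synthesize (text from a local txt file or a text string)

    - err: NSError **, receives error information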

3.1.4 BDSSpeechSynthesizerDelegate: Speech Synthesis Delegate

Adopt this protocol to observe the playback events of the audio player.

- Method list

@optional

- (void)synthesizerNewDataArrived:(NSData *)newData DataFormat:(BDSAudioFormat)fmt characterCount:(int)newLength sentenceNumber:(NSInteger)SynthesizeSentence;

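- Description: called when newly synthesized audio data arrives.

- Input parameters:

  - newData: NSData, the synthesized audio data.

  - fmt: BDSAudioFormat, the format of the audio in the delivered buffer.

  - newLength: int, the number of characters of the current sentence synthesized so far.

  - SynthesizeSentence: NSInteger, the sentence ID, generated and returned by the SDK.

For the remaining protocol methods, see the comments in the BDSSpeechSynthesizerDelegate.h header file.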
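Since characterCount reports how many characters of the current sentence have been synthesized so far, one way to show per-sentence progress is to divide it by the sentence length. This assumes the app tracks that length itself (the demo keeps its own per-sentence bookkeeping) and measures it in the same unit the SDK uses for characterCount.

The sketch is plain C; synth_progress is a hypothetical helper, not part of the SDK.

```c
/* Hypothetical helper, not part of the SDK: turns the characterCount passed
   to synthesizerNewDataArrived:... into a progress fraction in [0, 1].
   total_chars is the length of the sentence text, which the app tracks
   itself, measured in the same unit the SDK uses for characterCount. */
double synth_progress(int synthesized_chars, int total_chars) {
    if (total_chars <= 0 || synthesized_chars <= 0) {
        return 0.0; /* length unknown or nothing synthesized yet */
    }
    if (synthesized_chars >= total_chars) {
        return 1.0; /* whole sentence synthesized */
    }
    return (double)synthesized_chars / (double)total_chars;
}
```

Clamping to [0, 1] keeps a progress bar stable if the reported count ever exceeds the length the app computed.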

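3.2 Integration Steps

3.2.1 Initialize the Environment

This involves the following steps:

- Copy the VAD model file and the AEC model file to a directory on the device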

// Call these during initialization
[self configVADFile];
[self configAECVADDebug];

// Pass the path of the VAD model bundled in BDSClientSDK.bundle to the SDK
- (void)configVADFile {
    NSString *vad_filepath = [[NSBundle mainBundle] pathForResource:@"BDSClientSDK.bundle/EASRResources/chuangxin.ota.v1.vad" ofType:@"pkg"];
    [self.asrEventManager setParameter:vad_filepath forKey:BDS_ASR_MODEL_VAD_DAT_FILE];
}

// Save the AEC, VAD and wakeup debug files
- (void)configAECVADDebug {
    [self.asrEventManager setParameter:@(YES) forKey:BDS_MIC_SAVE_AEC_DEBUG_FILE];
    [self.asrEventManager setParameter:@(YES) forKey:BDS_MIC_SAVE_VAD_DEBUG_FILE];
    [self.asrEventManager setParameter:@(YES) forKey:BDS_MIC_SAVE_WAKEUP_DEBUG_FILE];
}

- Request the required permissions

- 需要的权限列表:

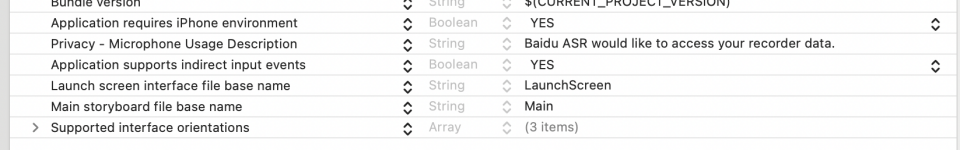

| 权限 | 说明 | 是否必须 |

|---|---|---|

| Privacy - Microphone Usage Description | 麦克风权限 | 是 |

| Application supports indirect input events | 支持硬件设备输入 | 是 |

权限参考info.plist

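3.2.2 Implement the Event Callbacks

- Speech recognition callback: implement the protocol method. The sample code is as follows. For parameter details, see "3.1.2 VoiceRecognitionClientWorkStatus".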

- (void)VoiceRecognitionClientWorkStatus:(int)workStatus obj:(id)aObj {
    switch (workStatus) {
        // Encoded audio data callback
        case EVoiceRecognitionClientWorkStatusNewEncodeData: {
            if (self.encodeFileHandler == nil) {
                self.encodeFileHandler = [self createFileHandleWithName:@"audio_encode" isAppend:NO];
            }
            [self.encodeFileHandler writeData:(NSData *)aObj];
            break;
        }
        // Recorded audio data callback
        case EVoiceRecognitionClientWorkStatusNewRecordData: {
            if (!self.isPlayTesting) {
                [self.fileHandler writeData:(NSData *)aObj];
            }
            break;
        }
        // Recognition started; audio capture and processing begin
        case EVoiceRecognitionClientWorkStatusStartWorkIng: {
            if (!self.isPlayTesting) {
                if (self.currentPlayTones & ASRVoiceRecognitionPlayTonesTypeStart) {
                    [self playTone:@"record_start.pcm"];
                }

                NSDictionary *logDic = [self parseLogToDic:aObj];
                [self printLogTextView:[NSString stringWithFormat:@"CALLBACK: start vr, log: %@\n", logDic]];
                [self onStartWorking];
            }
            break;
        }
        // The user started speaking
        case EVoiceRecognitionClientWorkStatusStart: {
            if (!self.isPlayTesting) {
                NSLog(@"====EVoiceRecognitionClientWorkStatusStart: %@", aObj);
                [self printLogTextView:[NSString stringWithFormat:@"CALLBACK: detect voice start point, sn: %@.\n", aObj]];
            }

            // Cancel the TTS associated with cTTS_ASR_SN (set in the ChunkTTS branch below)
            [self cancelTTSWithSN:cTTS_ASR_SN];
            break;
        }
        // Local audio capture ended; wait for the recognition result and stop recording
        case EVoiceRecognitionClientWorkStatusEnd: {
            if (!self.isPlayTesting) {
                if (self.currentPlayTones & ASRVoiceRecognitionPlayTonesTypeEnd) {
                    [self playTone:@"record_end.pcm"];
                }

                NSLog(@"====EVoiceRecognitionClientWorkStatusEnd: %@", aObj);
                [self printLogTextView:[NSString stringWithFormat:@"CALLBACK: detect voice end point, sn: %@.\n", aObj]];
            }
            break;
        }
        // Continuous on-screen display of intermediate results
        case EVoiceRecognitionClientWorkStatusFlushData: {
            if (!self.isPlayTesting) {
                [self printLogTextView:[NSString stringWithFormat:@"CALLBACK: partial result - %@.\n\n", [self getDescriptionForDic:aObj]]];
            }
            break;
        }
        // Recognition finished; the server returned a valid result
        case EVoiceRecognitionClientWorkStatusFinish: {
            if (!self.isPlayTesting) {
                if (self.currentPlayTones & ASRVoiceRecognitionPlayTonesTypeSuccess) {
                    [self playTone:@"record_success.pcm"];
                }

                [self printLogTextView:[NSString stringWithFormat:@"CALLBACK: final result - %@.\n\n", [self getDescriptionForDic:aObj]]];
                if (aObj) {
                    self.resultTextView.text = [self getDescriptionForDic:aObj];
                }
                if (!self.longSpeechFlag && !(self.enableMutiplySpeech || self.audioFileType == AudioFileType_MutiplySpeech_ASR || self.audioFileType == AudioFileType_MutiplySpeech_Wakeup)) {
                    [self onEnd];
                }
            } else {
                // Playback test, full-duplex scenario: do not stop if a final result arrives within 20 s
                self.playTestShouldStop = NO;
                if (self.playTestSceneType == ASRPlayTestSceneType_BaseLine) { // Baseline playback test: speak one query on the final result
                    [self stopTTS];
                    [[BDSSpeechSynthesizer sharedInstance] speakSentence:@"北京限行尾号 1、6" withError:nil];
                }
            }
            break;
        }
        // Current volume level
        case EVoiceRecognitionClientWorkStatusMeterLevel: {
            if (!self.isPlayTesting) {
                // [self printLogTextView:[NSString stringWithFormat:@"Current Level: %d\n", [aObj intValue]]];
            }
            break;
        }
        // Canceled by the user
        case EVoiceRecognitionClientWorkStatusCancel: {
            if (!self.isPlayTesting) {
                if (self.currentPlayTones & ASRVoiceRecognitionPlayTonesTypeCancel) {
                    [self playTone:@"record_cancel.pcm"];
                }

                [self printLogTextView:@"CALLBACK: user press cancel.\n"];
                [self onEnd];
                if (self.shouldStartASR) {
                    self.shouldStartASR = NO;
                    [self startWakeup2ASR];
                }

                if (self.randomStress) {
                    [self wp_rec_randomStress];
                } else if (self.randomStressASR || self.randomStressMutiplyASR) {
                    dispatch_after(dispatch_time(DISPATCH_TIME_NOW, NSEC_PER_SEC * 0.5), dispatch_get_main_queue(), ^{
                        [self voiceRecogButtonHelper:EVR_TRIGGER_MODE_CLICK];
                    });
                }
            } else {
                if (self.shouldStartASR) {
                    self.shouldStartASR = NO;
                    [self startWakeup2ASR];
                }
            }
            break;
        }
        // Recognition error
        case EVoiceRecognitionClientWorkStatusError: {
            if (!self.isPlayTesting) {
                if (self.currentPlayTones & ASRVoiceRecognitionPlayTonesTypeFail) {
                    [self playTone:@"record_fail.pcm"];
                }

                [self printLogTextView:[NSString stringWithFormat:@"CALLBACK: encountered error - %@.\n", (NSError *)aObj]];
                int errorcode = (int)[(NSError *)aObj code];
                // On an OTA engine load error, BDS_OTA_INIT must be called again:
                // [self.otaEventManger sendCommand:BDS_OTA_INIT withParameters:otaDic];
                // For usage, see the confOTATest method
                if (errorcode == EVRClientErrorCodeLoadEngineError) {
                    dispatch_async(dispatch_get_global_queue(0, 0), ^{
                        [self.asrEventManager sendCommand:BDS_ASR_CMD_STOP];  // stop the ASR engine first
                        [self confOTATest];                                     // then call BDS_OTA_INIT
                        [self.asrEventManager sendCommand:BDS_ASR_CMD_START]; // then restart the ASR engine
                    });
                }
                // The ASR engine is busy
                if (errorcode == EVRClientErrorCodeASRIsBusy) {
                    if (self.asrEventManager.isAsrRunning) {
                        [self onEnd];
                    } else {
                        [self onStartWorking];
                    }
                    return;
                }

                if (!(self.enableMutiplySpeech
                      || self.audioFileType == AudioFileType_MutiplySpeech_ASR
                      || self.audioFileType == AudioFileType_MutiplySpeech_Wakeup) ||
                    (self.enableMutiplySpeech && (errorcode == EVRClientErrorCodeDecoderNetworkUnavailable
                                                  || errorcode == EVRClientErrorCodeRecoderNoPermission
                                                  || errorcode == EVRClientErrorCodeRecoderException
                                                  || errorcode == EVRClientErrorCodeRecoderUnAvailable
                                                  || errorcode == EVRClientErrorCodeInterruption
                                                  || errorcode == EVRClientErrorCodeCommonPropertyListInvalid))) { // not full duplex, or an internal cancel in full duplex
                    [self onEnd];
                    if (self.randomStressASR) {
                        dispatch_after(dispatch_time(DISPATCH_TIME_NOW, NSEC_PER_SEC * 0.5), dispatch_get_main_queue(), ^{
                            [self voiceRecogButtonHelper:EVR_TRIGGER_MODE_CLICK];
                        });
                    }
                }
                if (self.randomStress) {
                    [self wp_rec_randomStress];
                }
            }
            break;
        }
        case EVoiceRecognitionClientWorkStatusLoaded: {
            if (!self.isPlayTesting) {
                [self printLogTextView:@"CALLBACK: offline engine loaded.\n"];
            }
            break;
        }
        case EVoiceRecognitionClientWorkStatusUnLoaded: {
            if (!self.isPlayTesting) {
                [self printLogTextView:@"CALLBACK: offline engine unLoaded.\n"];
            }
            break;
        }
        case EVoiceRecognitionClientWorkStatusChunkThirdData: {
            if (!self.isPlayTesting) {
                [self printLogTextView:[NSString stringWithFormat:@"CALLBACK: Chunk 3-party data length: %lu\n", (unsigned long)[(NSData *)aObj length]]];
                if (self.thirdTtsTest) {
                    if (self.isThirdFirst) {
                        self.isThirdFirst = NO;
                    } else {
                        self.isThirdFirst = YES;
                        [self stopTTS];
                        [self startThirdSynthesize];
                    }
                }
            }
            break;
        }
        case EVoiceRecognitionClientWorkStatusChunkNlu: {
            if (!self.isPlayTesting) {
                NSString *nlu = [[NSString alloc] initWithData:(NSData *)aObj encoding:NSUTF8StringEncoding];
                [self printLogTextView:[NSString stringWithFormat:@"CALLBACK: Chunk NLU data: %@\n", nlu]];
            }
            break;
        }
        case EVoiceRecognitionClientWorkStatusChunkEnd: {
            if (!self.isPlayTesting) {
                NSLog(@"====EVoiceRecognitionClientWorkStatusChunkEnd");
                [self printLogTextView:[NSString stringWithFormat:@"CALLBACK: Chunk end, sn: %@.\n", aObj]];
                if (!self.longSpeechFlag && !(self.enableMutiplySpeech || self.audioFileType == AudioFileType_MutiplySpeech_ASR || self.audioFileType == AudioFileType_MutiplySpeech_Wakeup)) {
                    [self onEnd];
                }
                if (self.randomStress) {
                    [self wp_rec_randomStress];
                } else if (self.randomStressASR) {
                    dispatch_after(dispatch_time(DISPATCH_TIME_NOW, NSEC_PER_SEC * 0.5), dispatch_get_main_queue(), ^{
                        [self voiceRecogButtonHelper:EVR_TRIGGER_MODE_CLICK];
                    });
                }
            }
            break;
        }
        case EVoiceRecognitionClientWorkStatusFeedback: {
            if (!self.isPlayTesting) {
                NSDictionary *logDic = [self parseLogToDic:aObj];
                [self printLogTextView:[NSString stringWithFormat:@"CALLBACK Feedback: %@\n", logDic]];
            }
            break;
        }
        case EVoiceRecognitionClientWorkStatusRecorderEnd: {
            if (!self.isPlayTesting) {
                [self printLogTextView:@"CALLBACK: recorder closed.\n"];
                if (self.audioFileType == AudioFileType_MutiplySpeech_ASR || self.audioFileType == AudioFileType_ASR) {
                    [self asrGuanceRecorderClosed];
                }
            }
            break;
        }
        case EVoiceRecognitionClientWorkStatusLongSpeechEnd: {
            if (!self.isPlayTesting) {
                [self printLogTextView:@"CALLBACK: Long Speech end.\n"];
                [self onEnd];
            }
            break;
        }
        case EVoiceRecognitionClientWorkStatusStop: {
            if (!self.isPlayTesting) {
                [self printLogTextView:@"CALLBACK: user press stop.\n"];
                [self onEnd];

                if (self.randomStress) {
                    [self wp_rec_randomStress];
                } else if (self.randomStressASR || self.randomStressMutiplyASR) {
                    dispatch_after(dispatch_time(DISPATCH_TIME_NOW, NSEC_PER_SEC * 0.5), dispatch_get_main_queue(), ^{
                        [self voiceRecogButtonHelper:EVR_TRIGGER_MODE_CLICK];
                    });
                }
            }
            break;
        }
        // TTS data returned; also carries the SN used for cancel/pause
        case EVoiceRecognitionClientWorkStatusChunkTTS: {
            if (!self.isPlayTesting) {
                NSDictionary *dict = (NSDictionary *)aObj;
                if (dict) {
                    NSDictionary *ttsResult = [dict objectForKey:@"origin_result"];
                    if (ttsResult && [ttsResult isKindOfClass:[NSDictionary class]]) {
                        NSString *txt = [ttsResult objectForKey:@"tex"];
                        if (txt.length) {
                            [self printLogTextView:[NSString stringWithFormat:@"CALLBACK: ChunkTTS %@.\n", txt]];
                        }
                        // [self playByAudioInfo:dict];
                    }
                    // NSData *ttsData = [dict objectForKey:@"audio_data"];
                    cTTS_ASR_SN = [dict objectForKey:BDS_ASR_CANCEL_TTS_SN_A];
                }
                NSLog(@"Chunk TTS data: %@", [dict description]);
            }
            break;
        }
        case EVoiceRecognitionClientWorkStatusRecorderPermission: {
            if (!self.isPlayTesting) {
                [self printLogTextView:[NSString stringWithFormat:@"CALLBACK: recorder permission - %@.\n", [aObj objectForKey:BDS_ASR_RESP_RECORDER_PERMISSION]]];
            }
            break;
        }
        default:
            break;
    }
}

- Speech synthesis callback: implement the BDSSpeechSynthesizerDelegate protocol methods. The sample code is as follows. For parameter details, see "3.1.4 BDSSpeechSynthesizerDelegate".

#pragma mark - implement BDSSpeechSynthesizerDelegate
- (void)synthesizerStartWorkingSentence:(NSInteger)SynthesizeSentence {
    NSLog(@"Did start synth %ld", (long)SynthesizeSentence);
    [self.CancelButton setEnabled:YES];
    [self.PauseOrResumeButton setEnabled:YES];
}

- (void)synthesizerFinishWorkingSentence:(NSInteger)SynthesizeSentence engineType:(BDSSynthesizerEngineType)type {
    NSLog(@"Did finish synth: %ld, engineType: %d", (long)SynthesizeSentence, (int)type);
    if (!isSpeak) {
        if (self.synthesisTexts.count > 0 &&
            SynthesizeSentence == [[[self.synthesisTexts objectAtIndex:0] objectForKey:@"ID"] integerValue]) {
            [self.synthesisTexts removeObjectAtIndex:0];
            [self updateSynthProgress];
        } else {
            NSLog(@"Sentence ID mismatch??? received ID: %ld\nKnown sentences:", (long)SynthesizeSentence);
            for (NSDictionary *dict in self.synthesisTexts) {
                NSLog(@"ID: %ld Text:\"%@\"", (long)[[dict objectForKey:@"ID"] integerValue], [((NSAttributedString *)[dict objectForKey:@"TEXT"]) string]);
            }
        }
        if (self.synthesisTexts.count == 0) {
            // [self.CancelButton setEnabled:NO];
            // [self.PauseOrResumeButton setEnabled:NO];
            [self.PauseOrResumeButton setTitle:[[NSBundle mainBundle] localizedStringForKey:@"pause" value:@"" table:@"Localizable"] forState:UIControlStateNormal];
        }
    }
}

- (void)synthesizerSpeechStartSentence:(NSInteger)SpeakSentence {
    NSLog(@"Did start speak %ld", (long)SpeakSentence);
}

- (void)synthesizerSpeechEndSentence:(NSInteger)SpeakSentence {
    NSLog(@"Did end speak %ld", (long)SpeakSentence);
    // Save the PCM data received for this sentence to the Documents directory
    NSString *docDir = [NSSearchPathForDirectoriesInDomains(NSDocumentDirectory, NSUserDomainMask, YES) firstObject];
    NSString *path = [docDir stringByAppendingPathComponent:[NSString stringWithFormat:@"test_speed_9_onnn_%ld.pcm", (long)SpeakSentence]];
    [self.playerData writeToFile:path atomically:YES];

    // Start a fresh buffer for the next sentence
    self.playerData = [[NSMutableData alloc] init];

    if (self.synthesisTexts.count > 0 &&
        SpeakSentence == [[[self.synthesisTexts objectAtIndex:0] objectForKey:@"ID"] integerValue]) {
        [self.synthesisTexts removeObjectAtIndex:0];
        [self updateSynthProgress];
    } else {
        NSLog(@"Sentence ID mismatch??? received ID: %ld\nKnown sentences:", (long)SpeakSentence);
        for (NSDictionary *dict in self.synthesisTexts) {
            NSLog(@"ID: %ld Text:\"%@\"", (long)[[dict objectForKey:@"ID"] integerValue], [((NSAttributedString *)[dict objectForKey:@"TEXT"]) string]);
        }
    }
    if (self.synthesisTexts.count == 0) {
        // [self.CancelButton setEnabled:NO];
        // [self.PauseOrResumeButton setEnabled:NO];
        [self.PauseOrResumeButton setTitle:[[NSBundle mainBundle] localizedStringForKey:@"pause" value:@"" table:@"Localizable"] forState:UIControlStateNormal];
    }

    [self SynthesizeTapped:nil];
}

- (void)synthesizerNewDataArrived:(NSData *)newData
                       DataFormat:(BDSAudioFormat)fmt
                   characterCount:(int)newLength
                   sentenceNumber:(NSInteger)SynthesizeSentence {
    NSLog(@"NewDataArrived data len: %lu", (unsigned long)[newData length]);
    // Accumulate the synthesized audio of the current sentence
    [self.playerData appendData:newData];

    // Find the bookkeeping entry for this sentence
    NSMutableDictionary *sentenceDict = nil;
    for (NSMutableDictionary *dict in self.synthesisTexts) {
        if ([[dict objectForKey:@"ID"] integerValue] == SynthesizeSentence) {
            sentenceDict = dict;
            break;
        }
    }
    if (sentenceDict == nil) {
        NSLog(@"Sentence ID mismatch??? received ID: %ld\nKnown sentences:", (long)SynthesizeSentence);
        for (NSDictionary *dict in self.synthesisTexts) {
            NSLog(@"ID: %ld Text:\"%@\"", (long)[[dict objectForKey:@"ID"] integerValue], [((NSAttributedString *)[dict objectForKey:@"TEXT"]) string]);
        }
        return;
    }
    // Record how many characters of this sentence have been synthesized so far
    [sentenceDict setObject:[NSNumber numberWithInteger:newLength] forKey:@"SYNTH_LEN"];
    [self refreshAfterProgressUpdate:sentenceDict];
}

- (void)synthesizerTextSpeakLengthChanged:(int)newLength
                           sentenceNumber:(NSInteger)SpeakSentence {
    NSLog(@"SpeakLen %ld, %d", (long)SpeakSentence, newLength);
    NSMutableDictionary *sentenceDict = nil;
    for (NSMutableDictionary *dict in self.synthesisTexts) {
        if ([[dict objectForKey:@"ID"] integerValue] == SpeakSentence) {
            sentenceDict = dict;
            break;
        }
    }
    if (sentenceDict == nil) {
        NSLog(@"Sentence ID mismatch??? received ID: %ld\nKnown sentences:", (long)SpeakSentence);
        for (NSDictionary *dict in self.synthesisTexts) {
            NSLog(@"ID: %ld Text:\"%@\"", (long)[[dict objectForKey:@"ID"] integerValue], [((NSAttributedString *)[dict objectForKey:@"TEXT"]) string]);
        }
        return;
    }
    // Record how many characters of this sentence have been spoken so far
    [sentenceDict setObject:[NSNumber numberWithInteger:newLength] forKey:@"SPEAK_LEN"];
    [self refreshAfterProgressUpdate:sentenceDict];
}

3.2.3 Initialize the SDK

- Sample code

// Log level: 0 disables logging; 6 (EVRDebugLogLevelTrace) prints all logs
[self.asrEventManager setParameter:@(EVRDebugLogLevelTrace) forKey:BDS_ASR_DEBUG_LOG_LEVEL];
// Environment settings:
// Recognition environment - PID. During the invitation-only test, the PID is 4144779 for everyone and is for trial use only. For production, contact technical support to obtain a dedicated PID.
[self.asrEventManager setParameter:@"4144779" forKey:BDS_ASR_PRODUCT_ID];
// Recognition environment - key
[self.asrEventManager setParameter:@"com.baidu.app" forKey:BDS_ASR_CHUNK_KEY];
// Recognition environment - URL
[self.asrEventManager setParameter:@"https://audiotest.baidu.com/v2_llm_test" forKey:BDS_ASR_SERVER_URL];

NSString *deviceId = [self.asrEventManager getDeviceId];
// CUID: the vendor identifier of this device
NSUUID *vendorUUID = [[UIDevice currentDevice] identifierForVendor];
NSString *uuidString = [vendorUUID UUIDString];
[self.asrEventManager setParameter:uuidString forKey:BDS_ASR_CUID];
// Session ID: a new random UUID for each session
NSString *sessionId = [[NSUUID UUID] UUIDString];
NSDictionary *extraDic = @{
    @"params": @{
        @"sessionId": sessionId
    }
};
// Set the bdvs protocol; extraDic carries the protocol content
[self.asrEventManager setChunkPamWithBdvsID:@"6973568c17a9d84040fe2abe365eb5e3_refactor"
                                   deviceID:deviceId
                                   extraDic:extraDic
                                  queryText:nil];
NSString *pam = {...}; // fill in the parameter content as in the demo
[self.asrEventManager setParameter:pam ?: @"" forKey:BDS_ASR_CHUNK_PARAM];

3.2.4 Start Recognition

// Parameters for the start-recognition stage
// Set VAD, trigger mode and duplex according to your business needs; see "3.3 Development Scenarios". The example below is the duplex recognition scenario.
// Enable VAD
[self.asrEventManager setParameter:@(YES) forKey:BDS_ASR_VAD_ENABLE_LONG_PRESS];
// Whether to enable duplex (1: duplex)
[self.asrEventManager setParameter:@(1) forKey:BDS_ASR_ENABLE_MUTIPLY_SPEECH];
// Trigger mode. 1: tap to recognize; 2: recognize after wakeup
[self.asrEventManager setParameter:@(EVR_TRIGGER_MODE_WAKEUP) forKey:BDS_ASR_TRIGGE_MODE];
// Required: audio compression type
[self.asrEventManager setParameter:@(EVR_AUDIO_COMPRESSION_OPUS) forKey:BDS_ASR_COMPRESSION_TYPE];
// Whether to return intermediate results: use the corresponding key from BDSASRParameters.h.
// Do not reuse BDS_ASR_COMPRESSION_TYPE for this; it would overwrite the compression type set above.
// Call BDS_ASR_CMD_START to start recognition
[self.asrEventManager sendCommand:BDS_ASR_CMD_START];

3.2.5 Stop Recognition

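Stops recognition but keeps the current result. Audio that has already been sent is recognized normally, and response audio is generated for it.

- Sample code

[self.asrEventManager sendCommand:BDS_ASR_CMD_STOP];

3.2.6 Cancel Recognition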

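Cancels recognition and stops further processing. Data that has been sent but not yet recognized and answered is discarded.

- Sample code

[self.asrEventManager sendCommand:BDS_ASR_CMD_CANCEL];

3.2.7 Pause Recognition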

- Sample code

NSMutableDictionary *params = [NSMutableDictionary dictionaryWithCapacity:2];
// _lastAsrsn is the SN of the current recognition. Obtain it from the VoiceRecognitionClientWorkStatus callback, as in the code below.
[params setValue:_lastAsrsn forKey:BDS_ASR_SN];
[self.asrEventManager sendCommand:BDS_ASR_CMD_PAUSE withParameters:params];

// Obtain the SN in the callback
- (void)VoiceRecognitionClientWorkStatus:(int)workStatus obj:(id)aObj {
    // Parse the callback object delivered with EVoiceRecognitionClientWorkStatusChunkTTS to get the SN
    if (workStatus == EVoiceRecognitionClientWorkStatusChunkTTS) {
        NSDictionary *dict = (NSDictionary *)aObj;
        if (dict) {
            NSDictionary *ttsResult = [dict objectForKey:@"origin_result"];
            if (ttsResult && [ttsResult isKindOfClass:[NSDictionary class]]) {
                NSString *txt = [ttsResult objectForKey:@"tex"];
                if (txt.length) {
                    [self printLogTextView:[NSString stringWithFormat:@"CALLBACK: ChunkTTS %@.\n", txt]];
                }
            }
            // Get the SN here
            _lastAsrsn = [dict objectForKey:BDS_ASR_CANCEL_TTS_SN_A];
        }
    }
}

3.2.8 Resume Recognition

- Sample code

[self.asrEventManager sendCommand:BDS_ASR_CMD_RESUME];

3.3 Development Scenarios

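The SDK supports three development scenarios:

- Duplex recognition: after starting, the user can hold multiple voice exchanges until they explicitly stop recognition.

- Tap short-speech recognition: after starting, a single short utterance of up to 60 s is recognized.

- Long-press recognition: while the button is held down, recognition continues without sentence segmentation.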

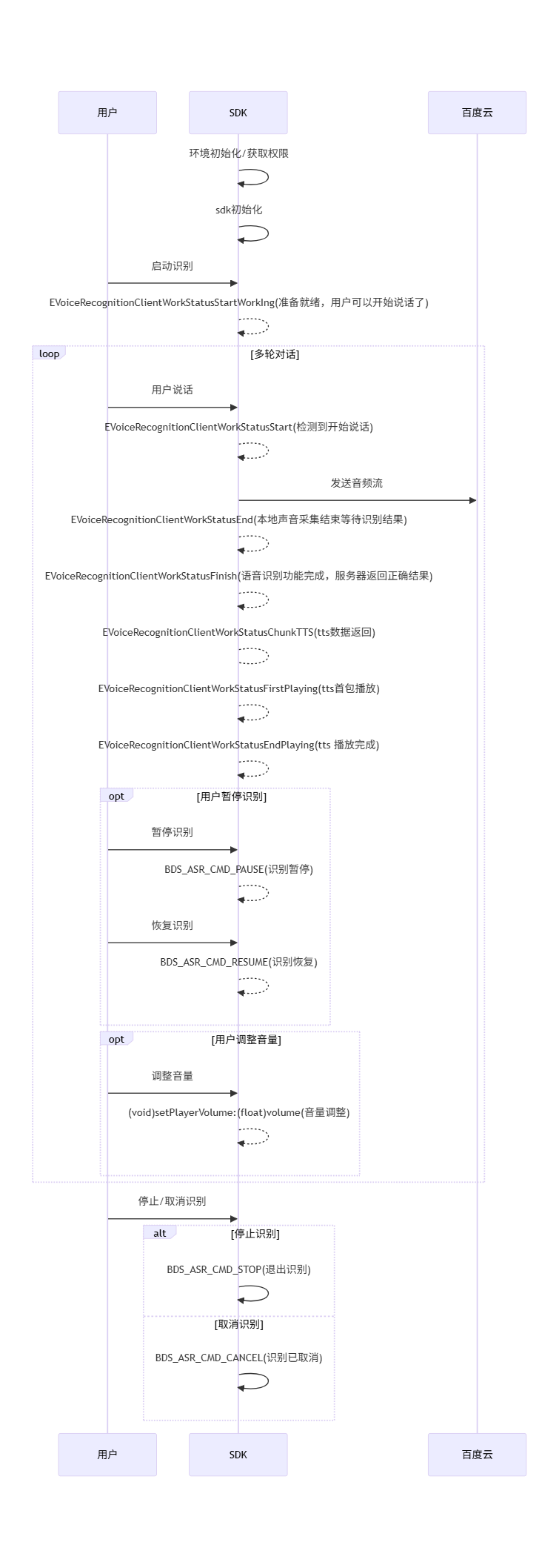

3.3.1 双工识别

- 设置方法

1- (IBAction)voiceRecogButtonTouchDown:(id)sender {

2 // 双工模式识别

3 [self.asrEventManager setParameter:@(1) forKey:BDS_ASR_ENABLE_MUTIPLY_SPEECH];

4 // 默认点击方式触发 1.点击方式触发 2.唤醒方式触发

5 [self.asrEventManager setParameter:@(EVR_TRIGGER_MODE_CLICK) forKey:BDS_ASR_TRIGGE_MODE];

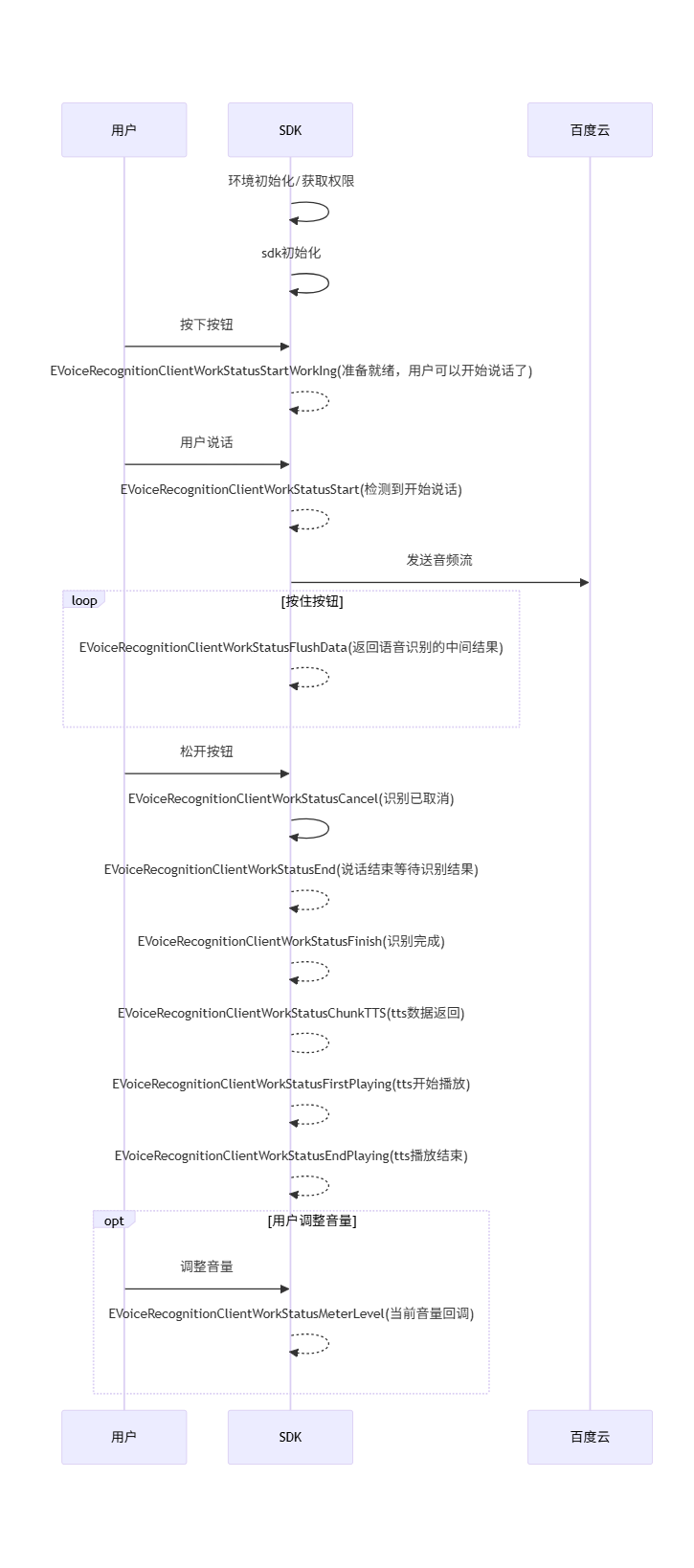

6}- 交互流程

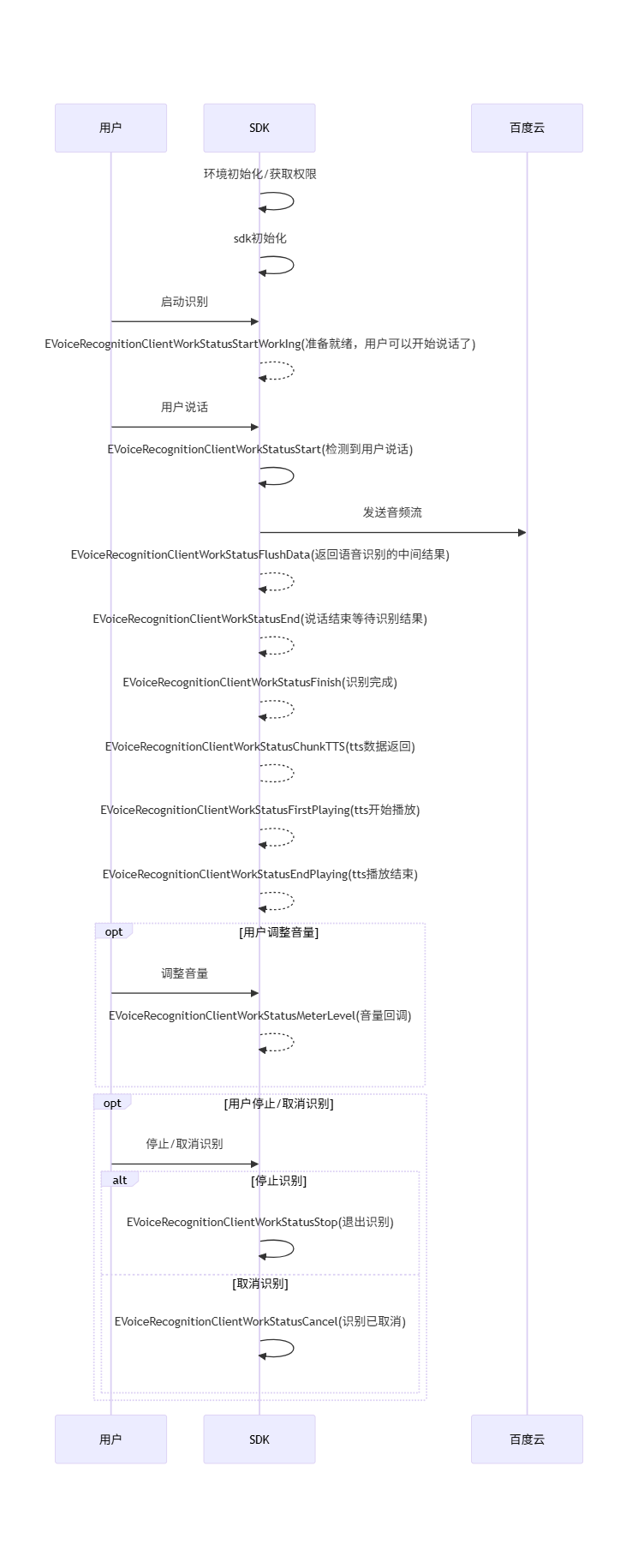

3.3.2 Tap Short-Speech Recognition

- Configuration

// .... other parameters omitted
// Tap
self.longPressFlag = NO;
// Duplex recognition mode
[self.asrEventManager setParameter:@(1) forKey:BDS_ASR_ENABLE_MUTIPLY_SPEECH];
// Trigger mode, tap by default. 1: tap trigger; 2: wakeup trigger
[self.asrEventManager setParameter:@(EVR_TRIGGER_MODE_CLICK) forKey:BDS_ASR_TRIGGE_MODE];

- Interaction flow

3.3.3 Long-Press Recognition

- Configuration

// .... other parameters omitted
// Long press
self.longPressFlag = YES;
// Duplex recognition mode
[self.asrEventManager setParameter:@(1) forKey:BDS_ASR_ENABLE_MUTIPLY_SPEECH];
// Trigger mode, tap by default. 1: tap trigger; 2: wakeup trigger
[self.asrEventManager setParameter:@(EVR_TRIGGER_MODE_CLICK) forKey:BDS_ASR_TRIGGE_MODE];

- Interaction flow

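3.4 SDK Error Codes

| Error code | Meaning |
|---|---|
| 655361 | Recording device error |
| 655362 | No recording permission |
| 655363 | Recording device unavailable |
| 655364 | Recording interrupted |
| 655365 | Large amount of blank audio |
| 1310722 | User did not speak |
| 1966082 | Network unavailable |
| 1966084 | Failed to parse the URL |
| 2031617 | Request timed out |
| 2031620 | Local network connection error |
| 2225211 | Error while interacting with the server |
| 2225213 | Timed out waiting for the upstream stream to be established |
| 2225218 | Speech too long |
| 2225219 | Audio does not meet recognition requirements |
| 2225221 | No matching result found |
| 2225223 | Protocol parameter error |
| 2625535 | Recognition busy |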
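An app usually wants to turn these codes into a user-facing message and a coarse reaction, such as asking for microphone permission or suggesting a network check.

The plain-C sketch below (it compiles unchanged in an Objective-C file) is a lookup table built from the codes and meanings above. All names are hypothetical (AppErrorCategory, AppErrorInfo, app_error_lookup). The grouping into categories is this sketch's own illustrative choice; the SDK does not define it.

```c
#include <stddef.h>

/* Illustrative grouping of the documented codes; not defined by the SDK. */
typedef enum {
    APP_ERR_RECORDER, /* recording device or permission problems */
    APP_ERR_SPEECH,   /* problems with the captured speech itself */
    APP_ERR_NETWORK,  /* local connectivity, URL and timeout problems */
    APP_ERR_SERVICE   /* server interaction, protocol or busy errors */
} AppErrorCategory;

typedef struct {
    int code;
    const char *meaning;
    AppErrorCategory category;
} AppErrorInfo;

/* Codes and meanings copied from the "3.4 SDK Error Codes" table. */
static const AppErrorInfo kAppErrors[] = {
    {655361,  "Recording device error",                       APP_ERR_RECORDER},
    {655362,  "No recording permission",                      APP_ERR_RECORDER},
    {655363,  "Recording device unavailable",                 APP_ERR_RECORDER},
    {655364,  "Recording interrupted",                        APP_ERR_RECORDER},
    {655365,  "Large amount of blank audio",                  APP_ERR_SPEECH},
    {1310722, "User did not speak",                           APP_ERR_SPEECH},
    {1966082, "Network unavailable",                          APP_ERR_NETWORK},
    {1966084, "Failed to parse the URL",                      APP_ERR_NETWORK},
    {2031617, "Request timed out",                            APP_ERR_NETWORK},
    {2031620, "Local network connection error",               APP_ERR_NETWORK},
    {2225211, "Error while interacting with the server",      APP_ERR_SERVICE},
    {2225213, "Timed out waiting for the upstream stream",    APP_ERR_SERVICE},
    {2225218, "Speech too long",                              APP_ERR_SPEECH},
    {2225219, "Audio does not meet recognition requirements", APP_ERR_SPEECH},
    {2225221, "No matching result found",                     APP_ERR_SPEECH},
    {2225223, "Protocol parameter error",                     APP_ERR_SERVICE},
    {2625535, "Recognition busy",                             APP_ERR_SERVICE},
};

/* Returns the table entry for an error code, or NULL if the code is not
   listed in this document. */
const AppErrorInfo *app_error_lookup(int code) {
    size_t count = sizeof kAppErrors / sizeof kAppErrors[0];
    for (size_t i = 0; i < count; ++i) {
        if (kAppErrors[i].code == code) {
            return &kAppErrors[i];
        }
    }
    return NULL;
}
```

In the EVoiceRecognitionClientWorkStatusError branch of the callback, you could pass (int)[(NSError *)aObj code] to app_error_lookup, as the sample callback computes errorcode. Codes outside this table return NULL and should fall back to a generic message.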